Big data is a well-known term for the huge amounts of digital data generated round the clock by (possibly) everything around us. The term ‘Big Data’ is most often used in connection with predictive analytics or other advanced methods for extracting value from the data at hand. The challenges it involves include data capture, curation, storage, search, sharing, transfer, analysis, visualization and information privacy. Accurate big data may lead to more confident decision making, which in turn can mean greater operational efficiency, lower costs and reduced risk.

In Big Data, datasets keep growing because they are increasingly fed by numerous sources: wireless sensor networks, information-sensing mobile devices, cameras, software logs, aerial (remote sensing) devices, radio frequency identification (RFID) readers and microphones. It would be no surprise if some 2.5 exabytes of data were created every day. A commonly cited figure is that nearly 90% of the world’s data has been generated within the last few years alone.

In 2012, Gartner updated its definition of this buzzword as follows: “Big data is high volume, high velocity, and/or high variety information assets that require new forms of processing to enable enhanced decision making, insight discovery and process optimization.” [Source: Gartner]. Some organizations have since added another V, “veracity”, to describe it, and the list of characteristics now looks like this:

Volume – the quantity of data generated matters a great deal in many circumstances. The name ‘Big Data’ itself contains a term related to size, hence this characteristic.

Variety – the next characteristic of big data is its variety, which refers to the number of different types of data (for example text, images, audio and video).

Velocity – the term ‘velocity’ here refers to how fast data is generated and processed to meet the demands and challenges of growth and development.

Veracity – the quality of the data being captured can vary greatly, and the validity of any analysis depends on the veracity of the source data.

Complexity – data management can become a very complex process, particularly when large volumes of data come from diverse sources.

If you are still wondering how zettabytes of data are being generated, the best way to understand it is to look at the sources themselves. These sources can be categorized by their functionality and usage. Here are some of the major Big Data sources:

- Black Box: A black box is an essential component of aircraft. It records the voices of the flight crew and information about the flight, including the aircraft’s performance parameters.

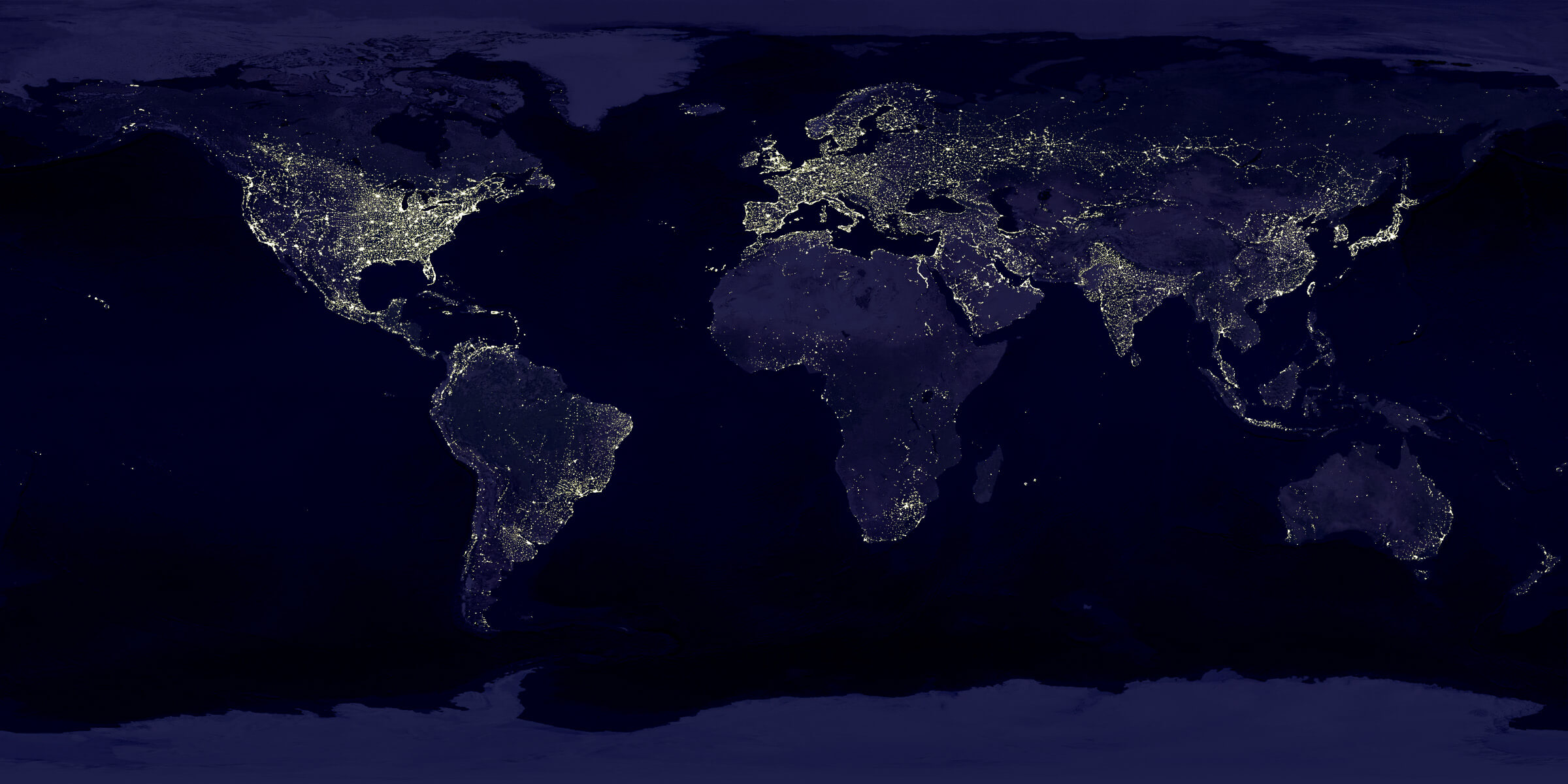

- Social Media: Data from this source is on the rise as the world becomes more connected and sharing becomes the order of the day. Platforms such as Facebook and WhatsApp keep attracting more users and now hold data from millions of people in even the most remote corners of the world.

- Stock Exchange: The stock exchange is a very big contributor as well. Statistics on the buying and selling of shares, on how shares of different companies behave, and on customers are all piling up.

These are some of the top players in this sphere. Several other domains also contribute actively to the world’s growing volume of digital data.